| 标题 | 说明 | 附加 |

|---|---|---|

| 《Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift》 | 原文 | 2015 |

| Batch Normalization 论文翻译 | DCD_Lin | |

| 深入理解Batch Normalization批标准化 | 郭耀华 | |

| 优化深度神经网络(三)Batch Normalization | 喵喵帕斯 | |

| Batch Normalization 学习笔记 | hjimce | 2016 |

白化

我们已经了解了如何使用PCA降低数据维度。在一些算法中还需要一个与之相关的预处理步骤,这个预处理过程称为白化(一些文献中也叫sphering)。举例来说,假设训练数据是图像,由于图像中相邻像素之间具有很强的相关性,所以用于训练时输入是冗余的。白化的目的就是降低输入的冗余性;更正式的说,我们希望通过白化过程使得学习算法的输入具有如下性质:(i)特征之间相关性较低;(ii)所有特征具有相同的方差。s

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

Sergey Ioffe, Christian Szegedy

(Submitted on 11 Feb 2015 (v1), last revised 2 Mar 2015 (this version, v3))

Training Deep Neural Networks is complicated by the fact that the distribution of each layer’s inputs changes during training, as the parameters of the previous layers change. This slows down the training by requiring lower learning rates and careful parameter initialization, and makes it notoriously hard to train models with saturating nonlinearities. We refer to this phenomenon as internal covariate shift, and address the problem by normalizing layer inputs. Our method draws its strength from making normalization a part of the model architecture and performing the normalization for each training mini-batch. Batch Normalization allows us to use much higher learning rates and be less careful about initialization. It also acts as a regularizer, in some cases eliminating the need for Dropout. Applied to a state-of-the-art image classification model, Batch Normalization achieves the same accuracy with 14 times fewer training steps, and beats the original model by a significant margin. Using an ensemble of batch-normalized networks, we improve upon the best published result on ImageNet classification: reaching 4.9% top-5 validation error (and 4.8% test error), exceeding the accuracy of human raters.

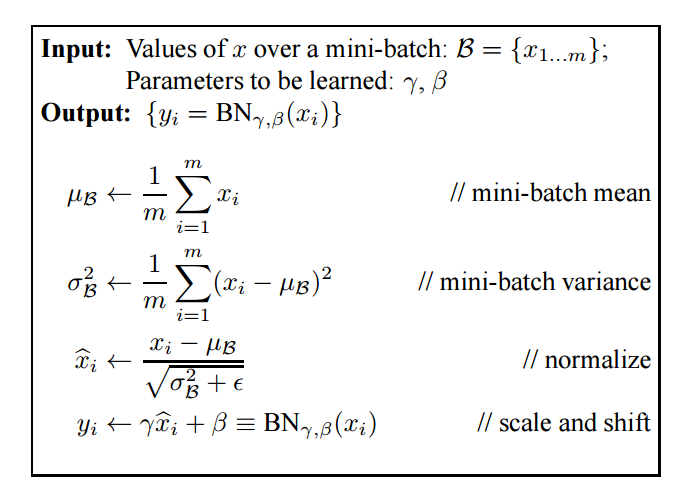

当前神经网络层之前的神经网络层的参数变化,引起神经网络每一层输入数据的分布产生了变化,这使得训练一个深度神经网络(Deep Neural Networks)变得复杂。这样就要求使用更小的学习率,参数初始化也需要更为谨慎的设置。并且由于非线性饱和(注:如sigmoid激活函数的非线性饱和问题),训练一个深度神经网络会非常困难。我们称这个现象为:internal covariate shif;同时利用归一化层输入解决这个问题。我们将归一化层输入作为神经网络的结构,并且对每一个小批量训练数据执行这一操作。Batch Normalization(BN) 能使用更高的学习率,并且不需要过多的注重参数初始化问题。BN 的过程与正则化相似,在某些情况下可以去除Dropout。将BN应用到一个state-of-the-art的图片分类模型中时,使用BN只要1/14的训练次数就能够达到同样的精度。使用含有BN神经网络模型能提升现有最好的ImageNet分类结果:在top-5 验证集中达到4.9%的错误率(测试集为4.8%),超出了人类的分类精度。

Subjects: Machine Learning (cs.LG)

Cite as: arXiv:1502.03167 [cs.LG]

(or arXiv:1502.03167v3 [cs.LG] for this version)