NAACL 2018最佳论文 Deep contextualized word representations:艾伦人工智能研究所提出新型深度语境化词表征(研究者使用从双向 LSTM 中得到的向量,该 LSTM 是使用成对语言模型(LM)目标在大型文本语料库上训练得到的。因此,该表征叫作 ELMo(Embeddings from Language Models)表征。)。

ELMo 核心思想

最推荐 ELMo TensorFlow Hub 的使用方法

Overview

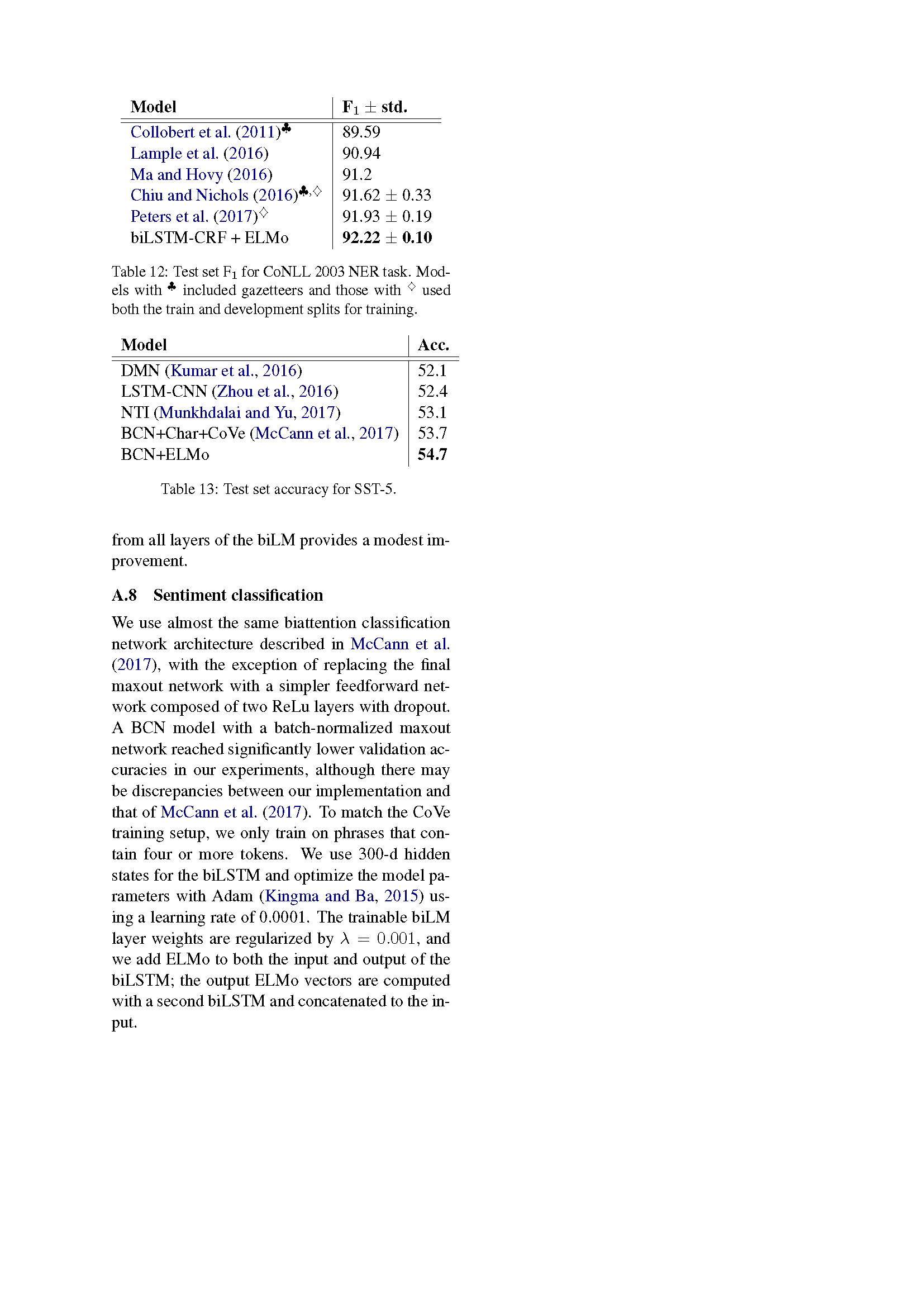

Computes contextualized word representations using character-based word representations and bidirectional LSTMs, as described in the paper “Deep contextualized word representations” [1].

This modules supports inputs both in the form of raw text strings or tokenized text strings.

The module outputs fixed embeddings at each LSTM layer, a learnable aggregation of the 3 layers, and a fixed mean-pooled vector representation of the input.

The complex architecture achieves state of the art results on several benchmarks. Note that this is a very computationally expensive module compared to word embedding modules that only perform embedding lookups. The use of an accelerator is recommended.

Trainable parameters

The module exposes 4 trainable scalar weights for layer aggregation.

Example use1

2

3

4

5elmo = hub.Module("https://tfhub.dev/google/elmo/2", trainable=True)

embeddings = elmo(

["the cat is on the mat", "dogs are in the fog"],

signature="default",

as_dict=True)["elmo"]

1 | elmo = hub.Module("https://tfhub.dev/google/elmo/2", trainable=True) |

Input

The module defines two signatures: default, and tokens.

With the default signature, the module takes untokenized sentences as input. The input tensor is a string tensor with shape [batch_size]. The module tokenizes each string by splitting on spaces.

With the tokens signature, the module takes tokenized sentences as input. The input tensor is a string tensor with shape [batch_size, max_length] and an int32 tensor with shape [batch_size] corresponding to the sentence length. The length input is necessary to exclude padding in the case of sentences with varying length.

Output

The output dictionary contains:

- word_emb: the character-based word representations with shape [batch_size, max_length, 512].

- lstm_outputs1: the first LSTM hidden state with shape [batch_size, max_length, 1024].

- lstm_outputs2: the second LSTM hidden state with shape [batch_size, max_length, 1024].

- elmo: the weighted sum of the 3 layers, where the weights are trainable. This tensor has shape [batch_size, max_length, 1024]

- default: a fixed mean-pooling of all contextualized word representations with shape [batch_size, 1024].

用代码来解释

更多内容见我的 GitHub

1 | import tensorflow as tf |

广义 ELMo 使用方法

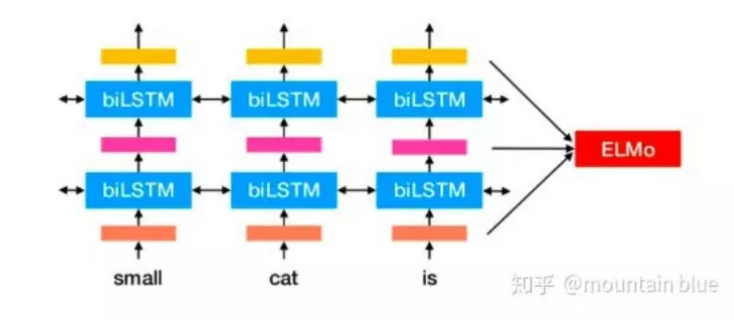

如何使用ELMo的词向量呢?( 论文 3.3 有详细描述)

在supervised learning的情况下,可以各种自如的使用:

- 直接将ELMo词向量 ELMo_k 与普通的词向量 x_k拼接(concat)[ x_k;ELMo_k ]。

- 直接将ELMo词向量ELMo_k 与隐层输出向量 h_k 拼接[ h_k;ELMo_k ],在SNLI,SQuAD上都有提升。

代码使用实例:

| 标题 | 说明 | 附加 |

|---|---|---|

| Deep contextualized word representations | 该研究提出了一种新型深度语境化词表征,可对词使用的复杂特征(如句法和语义)和词使用在语言语境中的变化进行建模(即对多义词进行建模)。这些表征可以轻松添加至已有模型,并在 6 个 NLP 问题中显著提高当前最优性能。 | 20180215 |

| TensorFlow Hub 实现 | Embeddings from a language model trained on the 1 Billion Word Benchmark. | |

| allennlp.org - elmo | 论文官网 elmo | |

| bilm-tf | 论文官方实现 Tensorflow implementation of contextualized word representations from bi-directional language models | |

| NAACL 2018最佳论文:艾伦人工智能研究所提出新型深度语境化词表征 | 机器之心解读 | 20180607 |

| 把 ELMo 作为 keras 的一个嵌入层使用 | GitHub | 201804 |

| NAACL18 Best Paper: ELMo | Liyuan Liu 解读 | |

| 论文笔记ELMo | 赵来福 详细解读 | |

| ELMo 最好用的词向量《Deep Contextualized Word Representations》 | mountain blue 详细解读 | |

| visualizing-elmo-contextual-vectors | 可视化ELMo上下文向量 | 20190417 |

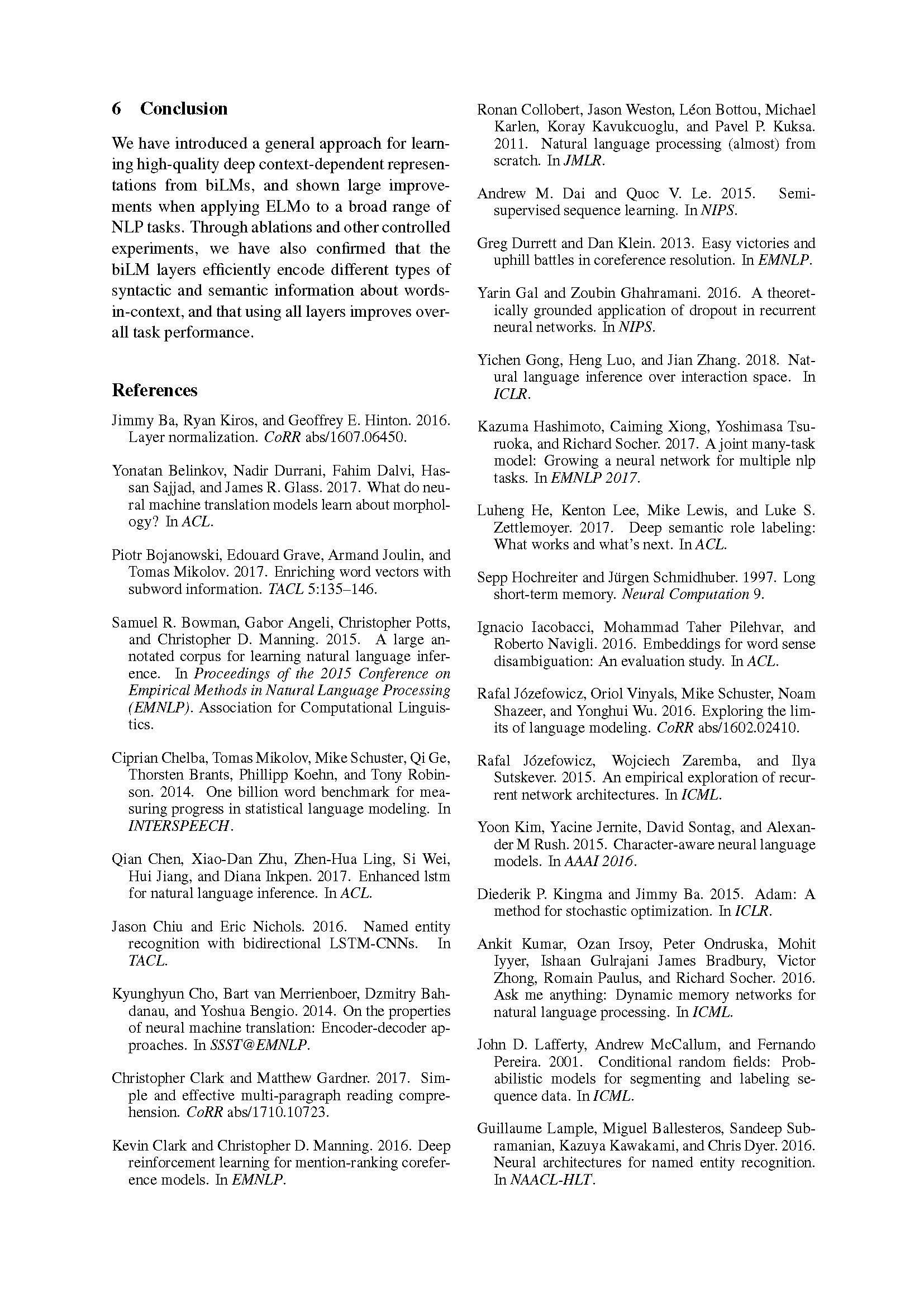

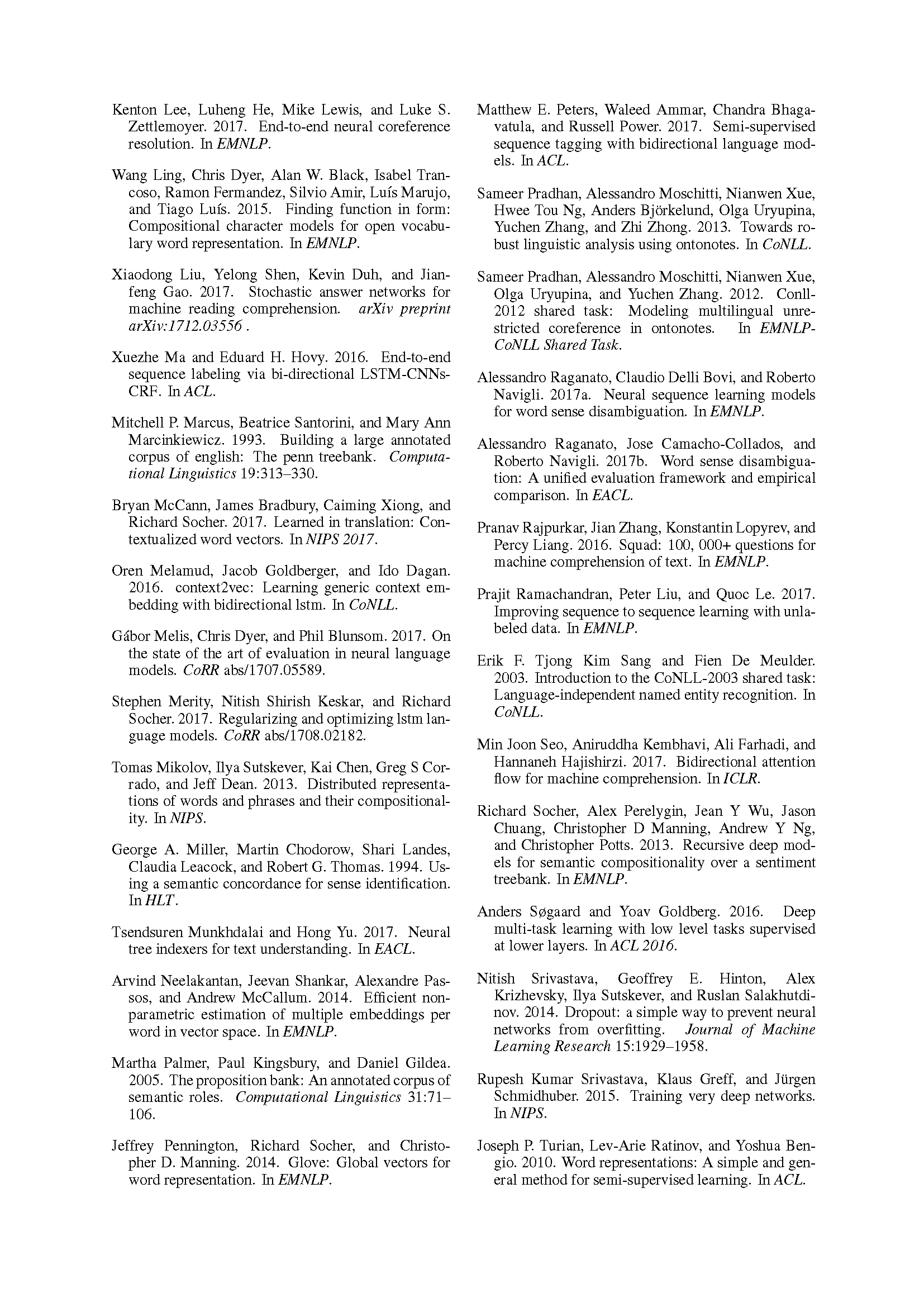

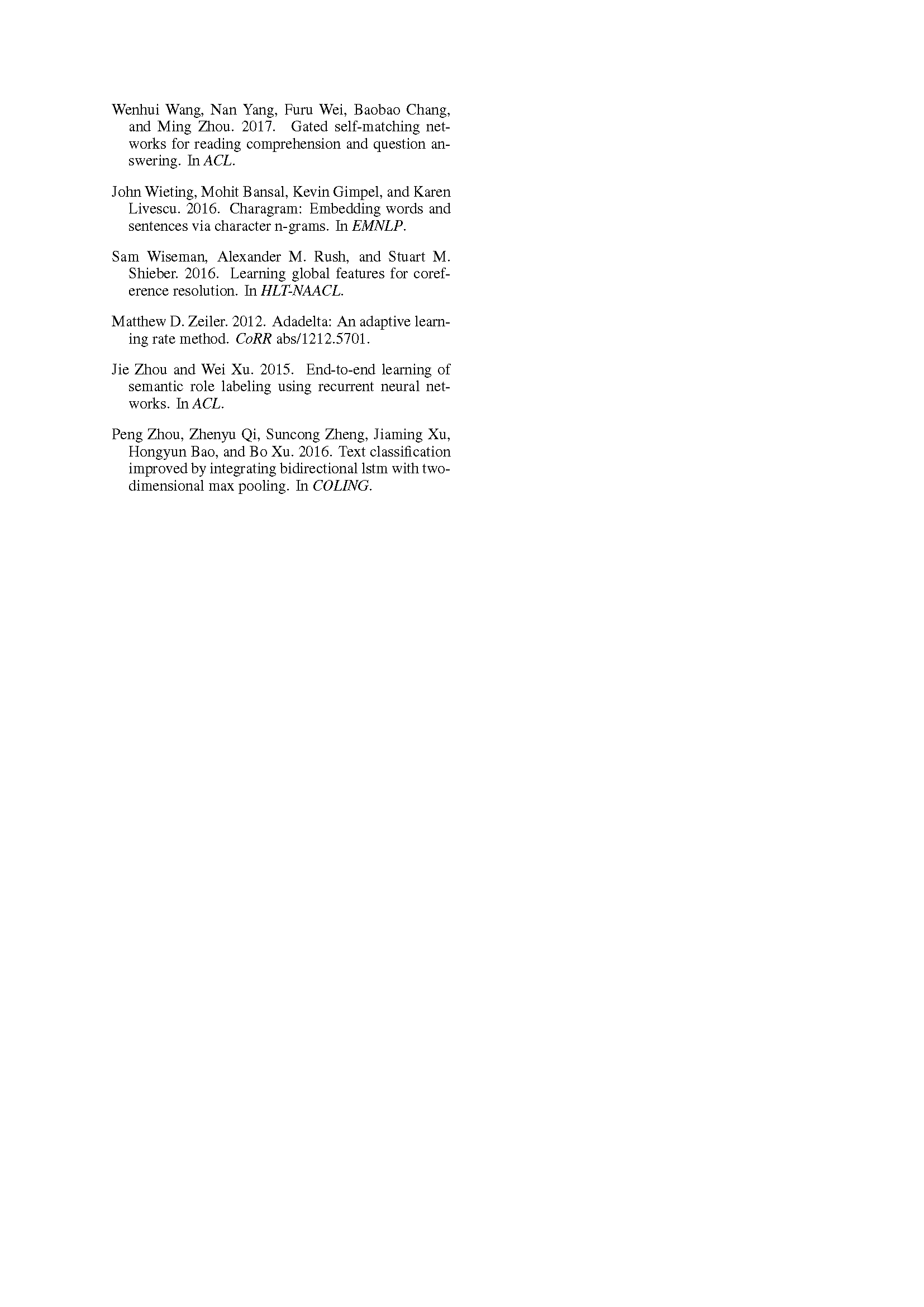

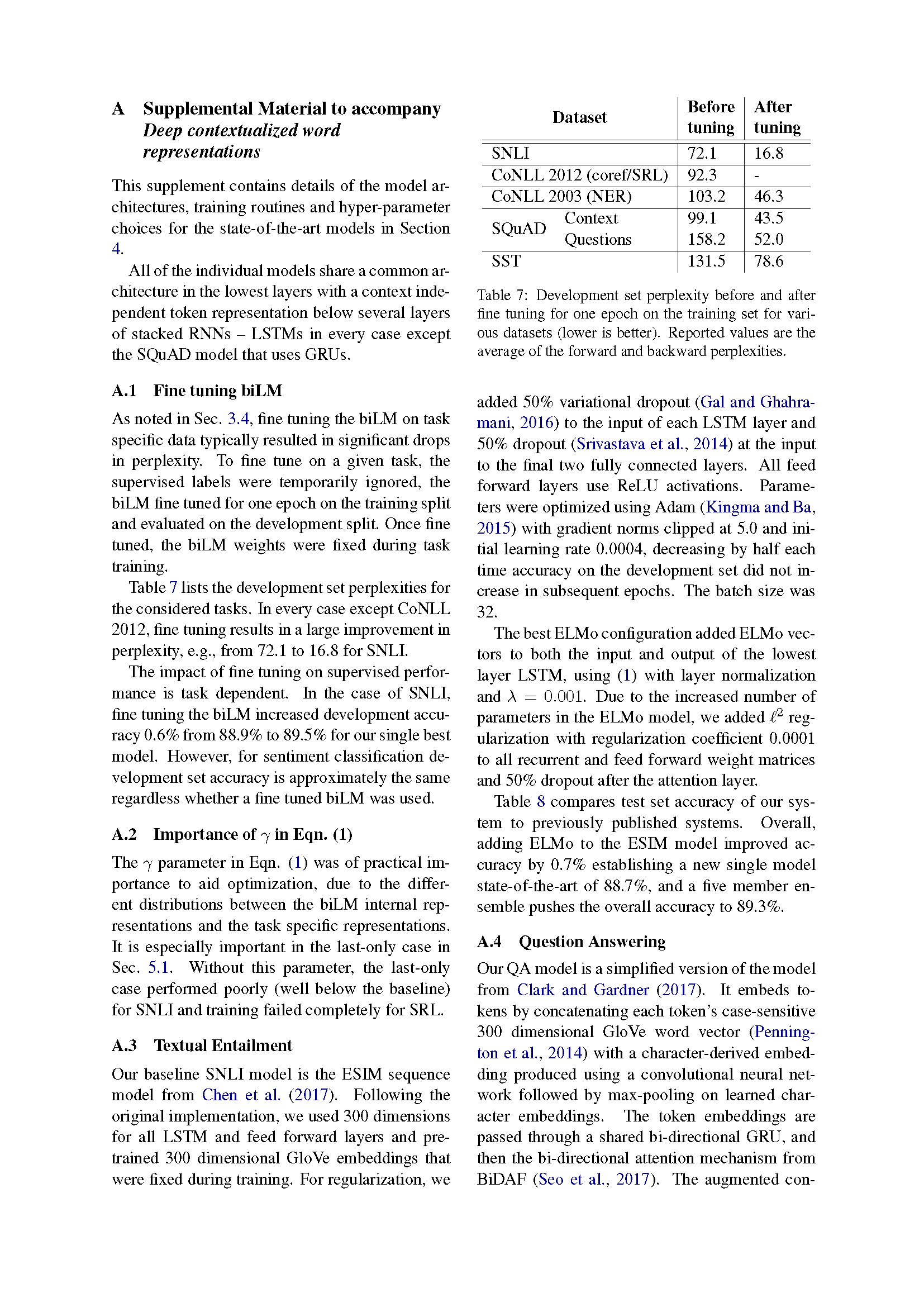

Deep contextualized word representations

Matthew E. Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee, Luke Zettlemoyer

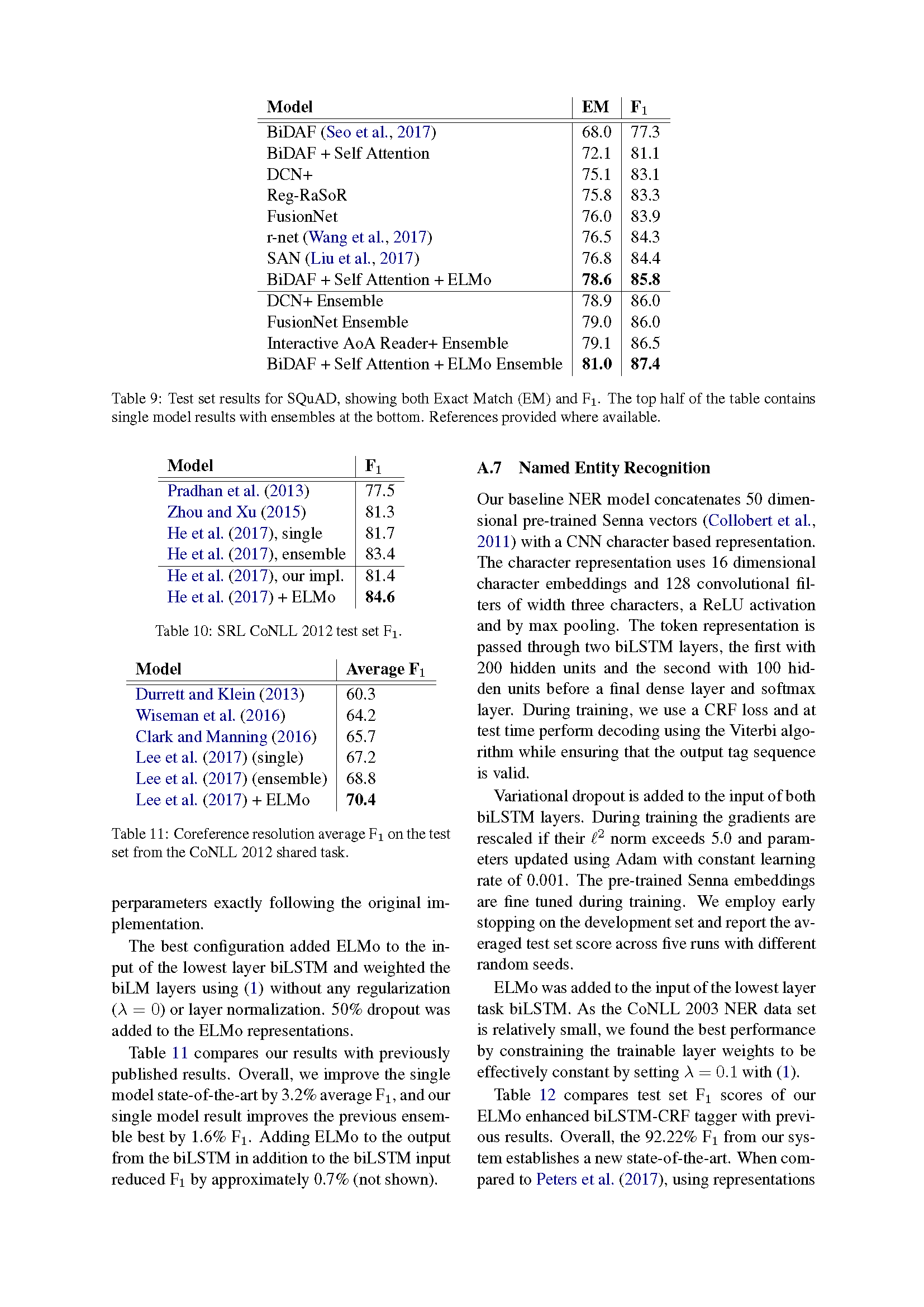

Our word vectors are learned functions of the internal states of a deep bidirectional language model (biLM), which is pre-trained on a large text corpus. We show that these representations can be easily added to existing models and significantly improve the state of the art across six challenging NLP problems, including question answering, textual entailment and sentiment analysis. We also present an analysis showing that exposing the deep internals of the pre-trained network is crucial, allowing downstream models to mix different types of semi-supervision signals.

在本论文中,我们介绍了一种新型深度语境化词表征,可对词使用的复杂特征(如句法和语义)和词使用在语言语境中的变化进行建模(即对多义词进行建模)。我们的词向量是深度双向语言模型(biLM)内部状态的函数,在一个大型文本语料库中预训练而成。本研究表明,这些表征能够被轻易地添加到现有的模型中,并在六个颇具挑战性的 NLP 问题(包括问答、文本蕴涵和情感分析)中显著提高当前最优性能。此外,我们的分析还表明,揭示预训练网络的深层内部状态至关重要,可以允许下游模型综合不同类型的半监督信号。

Comments: NAACL 2018. Originally posted to openreview 27 Oct 2017. v2 updated for NAACL camera ready

Subjects: Computation and Language (cs.CL)

Cite as: arXiv:1802.05365 [cs.CL]

(or arXiv:1802.05365v2 [cs.CL] for this version)