Transformer: Code Implementation and Applications

Transformer_implementation_and_application: this repository fully reproduces the Transformer model in only 300 lines of code (TensorFlow 2) and applies it to neural machine translation and a chatbot.

Attention Is All You Need

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin

(Submitted on 12 Jun 2017 (v1), last revised 6 Dec 2017 (this version, v5))

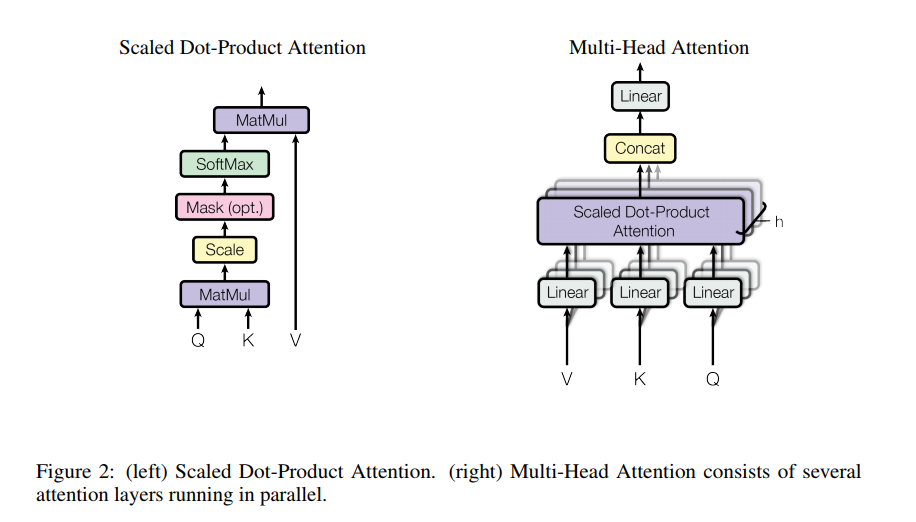

The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder-decoder configuration. The best performing models also connect the encoder and decoder through an attention mechanism. We propose a new simple network architecture, the Transformer, based solely on attention mechanisms, dispensing with recurrence and convolutions entirely. Experiments on two machine translation tasks show these models to be superior in quality while being more parallelizable and requiring significantly less time to train. Our model achieves 28.4 BLEU on the WMT 2014 English-to-German translation task, improving over the existing best results, including ensembles, by over 2 BLEU. On the WMT 2014 English-to-French translation task, our model establishes a new single-model state-of-the-art BLEU score of 41.8 after training for 3.5 days on eight GPUs, a small fraction of the training costs of the best models from the literature. We show that the Transformer generalizes well to other tasks by applying it successfully to English constituency parsing both with large and limited training data.

Comments: 15 pages, 5 figures

Subjects: Computation and Language (cs.CL); Machine Learning (cs.LG)

Cite as: arXiv:1706.03762 [cs.CL]

(or arXiv:1706.03762v5 [cs.CL] for this version)

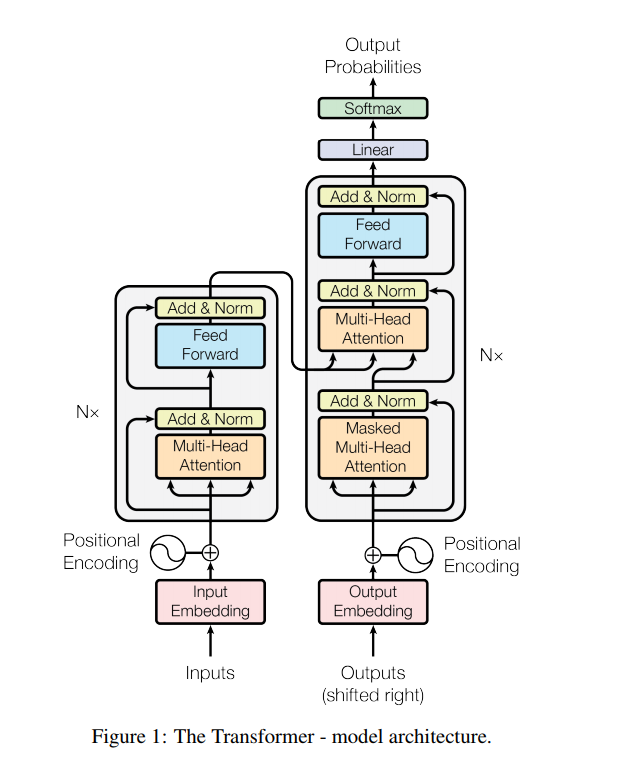

Transformer model architecture diagram

| Title | Description | Notes |
|---|---|---|
| Attention Is All You Need | Original paper | 20170612 |
| The Illustrated Transformer | Introductory walkthrough of the Transformer | |

Code implementations

| Name | Description | Date |
|---|---|---|
| transformer.ipynb | Official TensorFlow implementation | Continuously updated |
| bert_language_understanding | Pre-training of Deep Bidirectional Transformers for Language Understanding: pre-train TextCNN | 20181116 |
| tensorflow/tensor2tensor/tensor2tensor/models/transformer.py | Official tensor2tensor implementation | Continuously updated |
| google-research/bert/modeling.py | Official BERT implementation. This is almost an exact implementation of the original Transformer encoder. In practice, the multi-headed attention is done with transposes and reshapes rather than actual separate tensors. | Continuously updated |
| Baidu transformer | Baidu implementation, with analysis | Continuously updated |
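
The note on `google-research/bert/modeling.py` above says that multi-headed attention is done with transposes and reshapes rather than separate tensors per head. The following is a minimal sketch of that idea, written in NumPy rather than TensorFlow to keep it dependency-light. It is not code from any of the listed repositories: the helper names (`split_heads`, `merge_heads`, `multi_head_self_attention`), the weight shapes, and the omission of masking, biases, and dropout are all assumptions made for illustration.

```python
import numpy as np


def split_heads(x, num_heads):
    """Reshape (batch, seq_len, d_model) -> (batch, num_heads, seq_len, depth)."""
    batch, seq_len, d_model = x.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    depth = d_model // num_heads
    # Split the last axis into (num_heads, depth), then move heads next to batch.
    return x.reshape(batch, seq_len, num_heads, depth).transpose(0, 2, 1, 3)


def merge_heads(x):
    """Inverse of split_heads: (batch, num_heads, seq_len, depth) -> (batch, seq_len, d_model)."""
    batch, num_heads, seq_len, depth = x.shape
    return x.transpose(0, 2, 1, 3).reshape(batch, seq_len, num_heads * depth)


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, wq, wk, wv, wo, num_heads):
    """Scaled dot-product self-attention over all heads at once (no mask, no bias)."""
    # One projection per role; the heads live inside the last axis until split.
    q = split_heads(x @ wq, num_heads)
    k = split_heads(x @ wk, num_heads)
    v = split_heads(x @ wv, num_heads)
    depth = q.shape[-1]
    # (batch, heads, seq_len, seq_len) attention scores, scaled by sqrt(depth).
    scores = q @ k.transpose(0, 1, 3, 2) / np.sqrt(depth)
    weights = softmax(scores, axis=-1)
    context = weights @ v  # (batch, heads, seq_len, depth)
    return merge_heads(context) @ wo, weights


# Hypothetical sizes chosen only for the demonstration.
rng = np.random.default_rng(0)
batch, seq_len, d_model, num_heads = 2, 5, 16, 4
x = rng.normal(size=(batch, seq_len, d_model))
wq, wk, wv, wo = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, weights = multi_head_self_attention(x, wq, wk, wv, wo, num_heads)
print(out.shape)      # (2, 5, 16)
print(weights.shape)  # (2, 4, 5, 5)
```

Because the reshape cuts the last axis into contiguous chunks, head `h` sees exactly columns `h * depth` to `(h + 1) * depth` of the projected tensor. The result therefore matches what separate per-head projections would produce, while all heads are computed in a single batched matrix multiplication.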