Real-world applications of image style transfer

Prisma photo-editing app

Building the future of photo and video editing.

Experiments

- Image-Captioning: a CNN encoder and an RNN decoder with Bahdanau attention for image captioning (image-to-text) on the MS-COCO dataset.

- Image_to_Text

Using the image captioning task on the MS-COCO dataset as an example, this provides template code for Image_to_Text.

- Image style transfer
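The Image-Captioning experiment pairs a CNN encoder with an RNN decoder that attends over image regions using Bahdanau (additive) attention. The NumPy sketch below shows a single attention step under stated assumptions: the encoder output is a flattened grid of L feature vectors, and `W1`, `W2`, `v` and all dimensions are random placeholders rather than the experiment's trained parameters. `bahdanau_attention` is a hypothetical helper name, not a function from the Image_to_Text code.

```python
import numpy as np

def bahdanau_attention(features, hidden, W1, W2, v):
    """One step of additive (Bahdanau) attention.

    features: (L, D_enc) encoder outputs, e.g. an 8x8 CNN feature map flattened to L=64
    hidden:   (D_dec,) current RNN decoder hidden state
    W1: (D_enc, U), W2: (D_dec, U), v: (U,) projection weights (learned in a real model)
    Returns (context, weights) with shapes (D_enc,) and (L,).
    """
    # score_i = v^T tanh(W1^T f_i + W2^T h): one scalar per image location
    scores = np.tanh(features @ W1 + hidden @ W2) @ v
    # softmax over locations (subtract the max for numerical stability)
    exp = np.exp(scores - scores.max())
    weights = exp / exp.sum()
    # context vector = attention-weighted average of the encoder features
    context = weights @ features
    return context, weights

rng = np.random.default_rng(0)
L, D_enc, D_dec, U = 64, 256, 512, 128
features = rng.standard_normal((L, D_enc))
hidden = rng.standard_normal(D_dec)
W1 = rng.standard_normal((D_enc, U)) * 0.05  # random placeholders, not trained weights
W2 = rng.standard_normal((D_dec, U)) * 0.05
v = rng.standard_normal(U) * 0.05
context, weights = bahdanau_attention(features, hidden, W1, W2, v)
print(context.shape, weights.shape)  # (256,) (64,)
```

In a full captioning decoder the context vector is typically combined with the previous word's embedding before each RNN step; that wiring is omitted here.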

Further reading

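| Title | Summary | Date |
|---|---|---|
| A Brief History of Image Style Transfer (Neural Style) | Summarizes the history of image style transfer from 1960 to 2015 | 2018-01-04 |
| Looking for the Next Prisma App: Applications of Deep Learning in Image Processing | Covers traditional image processing (e.g. super-resolution, grayscale-image colorization, 2D/3D conversion), image/video stylization, and image generation | 2019-05-26 |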

Research progress in image style transfer

For more papers, see Neural-Style-Transfer-Papers.

2015 Paper A Neural Algorithm of Artistic Style

In fine art, especially painting, humans have mastered the skill to create unique visual experiences through composing a complex interplay between the content and style of an image. Thus far the algorithmic basis of this process is unknown and there exists no artificial system with similar capabilities. However, in other key areas of visual perception such as object and face recognition near-human performance was recently demonstrated by a class of biologically inspired vision models called Deep Neural Networks. Here we introduce an artificial system based on a Deep Neural Network that creates artistic images of high perceptual quality. The system uses neural representations to separate and recombine content and style of arbitrary images, providing a neural algorithm for the creation of artistic images. Moreover, in light of the striking similarities between performance-optimised artificial neural networks and biological vision, our work offers a path forward to an algorithmic understanding of how humans create and perceive artistic imagery.

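The abstract says the system separates and recombines content and style using neural representations. Concretely, the paper matches content through the feature maps themselves and style through Gram matrices (correlations between feature maps). The sketch below writes those two losses in NumPy on precomputed feature arrays; it assumes the features already come from a pretrained CNN (the paper uses VGG) and omits the gradient-based optimization of the output image. The layer names and the `alpha`/`beta` defaults are placeholders, not values from the paper.

```python
import numpy as np

def gram_matrix(feat):
    """feat: (N, H, W) feature maps of one layer -> (N, N) Gram matrix G = F F^T."""
    n = feat.shape[0]
    F = feat.reshape(n, -1)  # N filters x M spatial positions
    return F @ F.T

def content_loss(gen_feat, content_feat):
    """Squared-error loss between generated and content feature maps."""
    return 0.5 * np.sum((gen_feat - content_feat) ** 2)

def style_layer_loss(gen_feat, style_feat):
    """Squared difference of Gram matrices, normalized by 4 N^2 M^2."""
    n = gen_feat.shape[0]
    m = gen_feat.shape[1] * gen_feat.shape[2]
    G, A = gram_matrix(gen_feat), gram_matrix(style_feat)
    return np.sum((G - A) ** 2) / (4.0 * n ** 2 * m ** 2)

def total_loss(gen, content, style, content_layer, style_layers, alpha=1.0, beta=1e3):
    """gen/content/style: dicts of layer name -> (N, H, W) feature array.
    Returns alpha * L_content + beta * L_style, weighting style layers equally."""
    l_content = content_loss(gen[content_layer], content[content_layer])
    w = 1.0 / len(style_layers)
    l_style = sum(w * style_layer_loss(gen[l], style[l]) for l in style_layers)
    return alpha * l_content + beta * l_style

# Random stand-in features; a real run would extract them from a pretrained CNN.
rng = np.random.default_rng(0)
layers = ["conv1_1", "conv2_1", "conv3_1"]  # hypothetical layer names
feats = {name: rng.standard_normal((8, 16, 16)) for name in layers}

# Shuffling spatial positions destroys the layout (content) but keeps the Gram matrix (style).
f = feats["conv1_1"]
perm = rng.permutation(16 * 16)
shuffled = f.reshape(8, -1)[:, perm].reshape(8, 16, 16)
print(content_loss(shuffled, f) > 0)         # True
print(style_layer_loss(shuffled, f) < 1e-9)  # True
```

The shuffle check illustrates why Gram matrices act as a style representation: they discard where features occur and keep only which features co-occur.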

Related resources

| Title | Description |
|---|---|
| A Neural Algorithm of Artistic Style (一个艺术风格化的神经网络算法) | Chinese translation of the paper |
| Neural-Style-Transfer | Keras 2.0+ implementation of Neural Style Transfer from the paper “A Neural Algorithm of Artistic Style” (http://arxiv.org/abs/1508.06576) |

2017 GitHub deep-photo-styletransfer

Code and data for the paper Deep Photo Style Transfer

This paper introduces a deep-learning approach to photographic style transfer that handles a large variety of image content while faithfully transferring the reference style. Our approach builds upon the recent work on painterly transfer that separates style from the content of an image by considering different layers of a neural network. However, as is, this approach is not suitable for photorealistic style transfer. Even when both the input and reference images are photographs, the output still exhibits distortions reminiscent of a painting. Our contribution is to constrain the transformation from the input to the output to be locally affine in colorspace, and to express this constraint as a custom fully differentiable energy term. We show that this approach successfully suppresses distortion and yields satisfying photorealistic style transfers in a broad variety of scenarios, including transfer of the time of day, weather, season, and artistic edits.

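The key contribution is constraining the input-to-output transform to be locally affine in color space. The paper expresses this as an energy term built on the Matting Laplacian; the sketch below is a simplified stand-in, not the paper's formulation. It fits a ridge-regularized affine color map (a 3x3 matrix plus an offset) in each non-overlapping patch and sums the fitting residuals, so a pure recoloring scores near zero while spatial distortion scores high. The function name, patch size and `eps` are illustrative assumptions.

```python
import numpy as np

def local_affine_penalty(inp, out, patch=3, eps=1e-4):
    """Simplified 'locally affine in color space' penalty (not the paper's energy term).

    For each non-overlapping patch x patch window, fit out_rgb ~ M @ inp_rgb + b by
    ridge-regularized least squares and add the squared fitting residual.
    inp, out: (H, W, 3) float arrays. Near 0 when out is an affine recoloring of inp.
    """
    H, W, _ = inp.shape
    total = 0.0
    for y in range(0, H - patch + 1, patch):
        for x in range(0, W - patch + 1, patch):
            X = inp[y:y + patch, x:x + patch].reshape(-1, 3)
            Y = out[y:y + patch, x:x + patch].reshape(-1, 3)
            Xa = np.hstack([X, np.ones((X.shape[0], 1))])  # add bias column -> (P, 4)
            # Solve (Xa^T Xa + eps I) A = Xa^T Y for the 4x3 affine parameters A.
            A = np.linalg.solve(Xa.T @ Xa + eps * np.eye(4), Xa.T @ Y)
            total += np.sum((Xa @ A - Y) ** 2)
    return total

rng = np.random.default_rng(0)
inp = rng.random((15, 15, 3))
M = np.array([[0.9, 0.1, 0.0], [0.0, 0.8, 0.2], [0.1, 0.0, 0.7]])
b = np.array([0.05, 0.0, -0.02])
recolored = inp @ M.T + b                  # global color change: affine everywhere
distorted = np.roll(recolored, 1, axis=1)  # same colors, shifted one pixel sideways

print(local_affine_penalty(inp, recolored))  # close to 0
print(local_affine_penalty(inp, distorted))  # much larger
```

A shifted image is the simplest example of the painting-like spatial distortion the abstract describes: colors no longer line up with the input's structure, so no per-patch affine map explains them.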

Examples

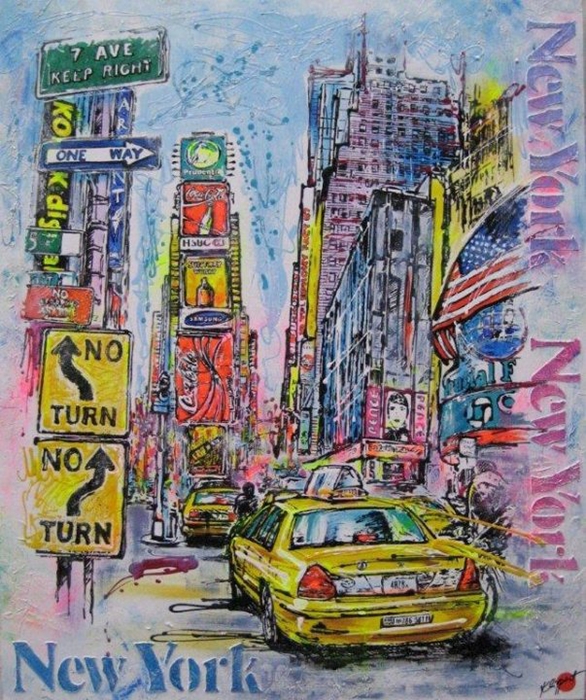

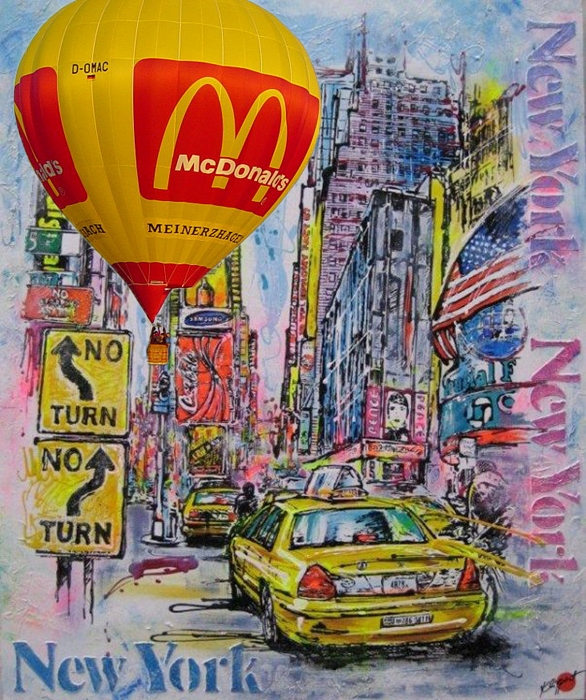

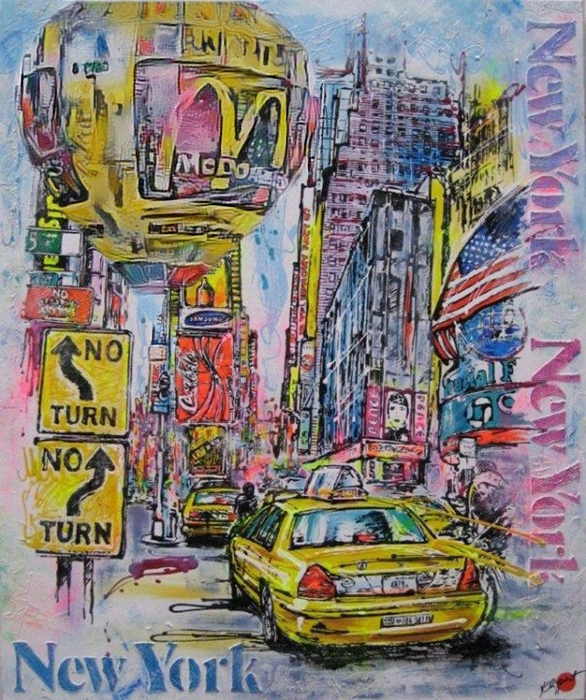

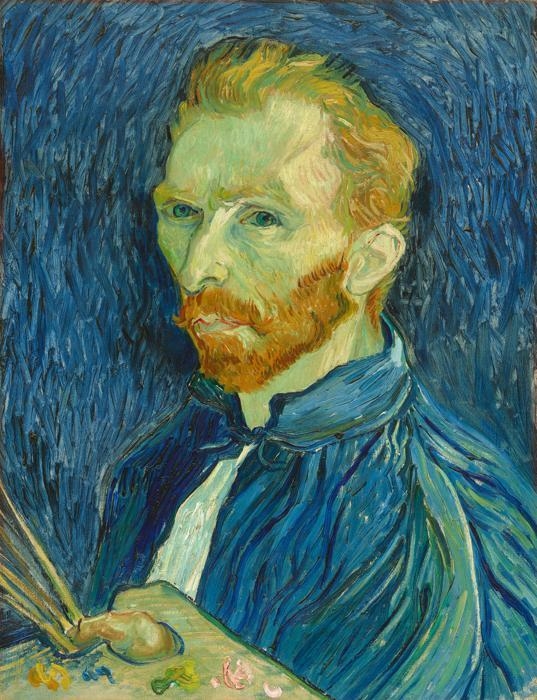

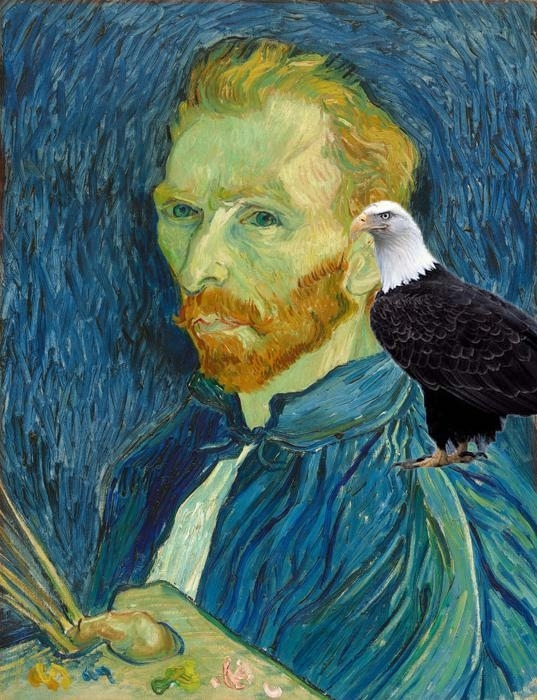

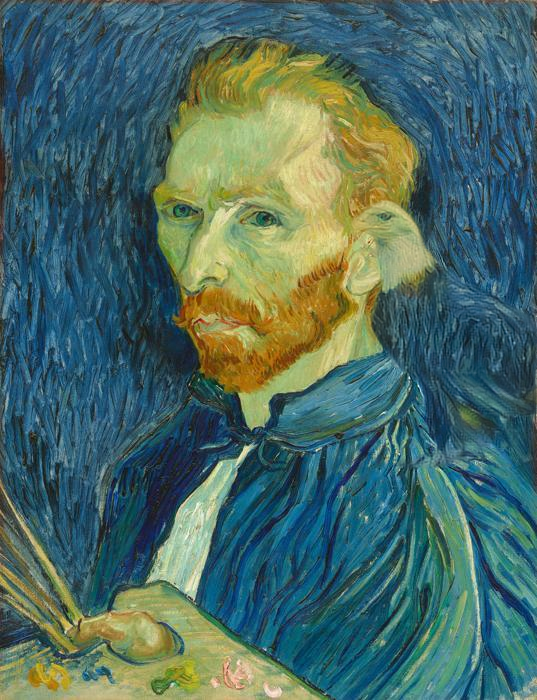

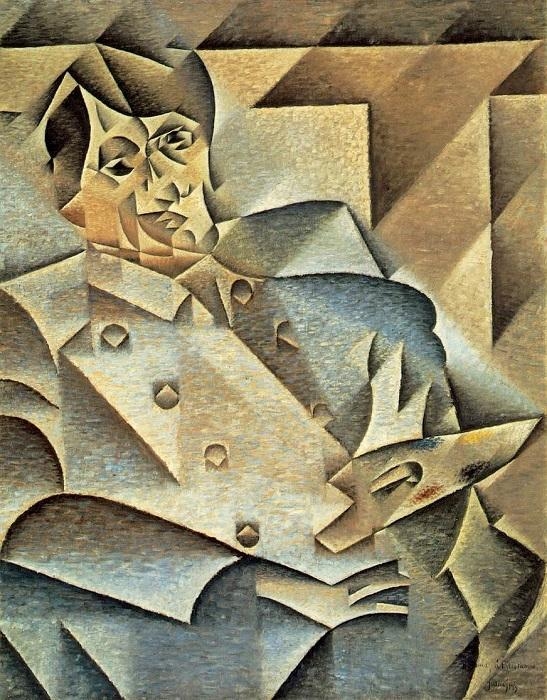

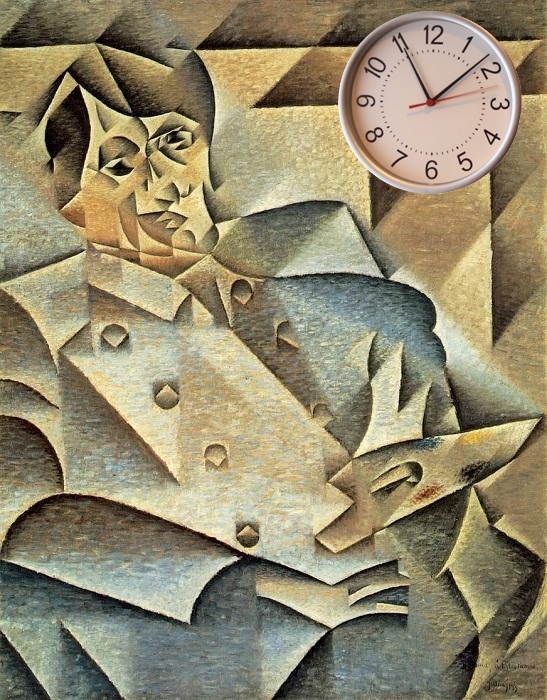

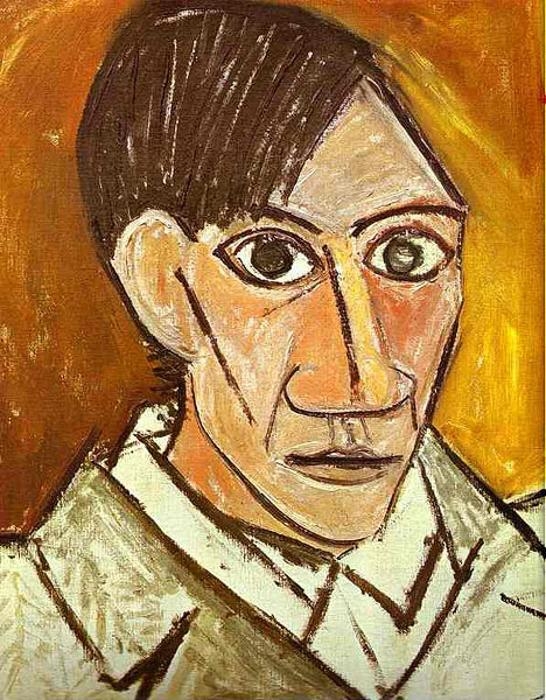

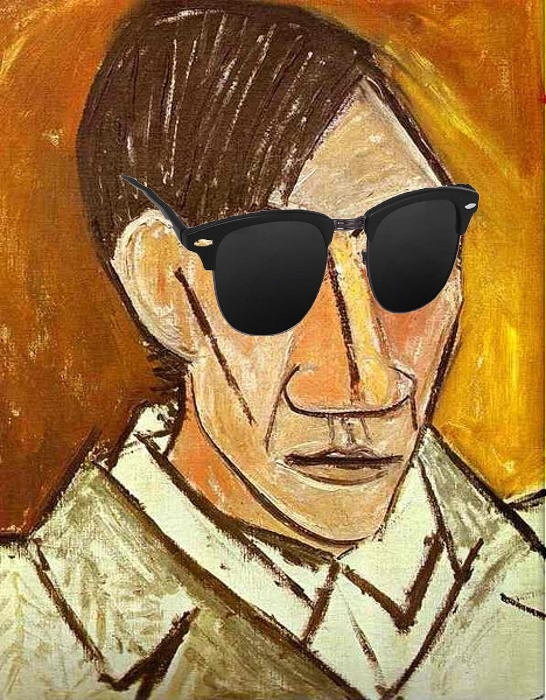

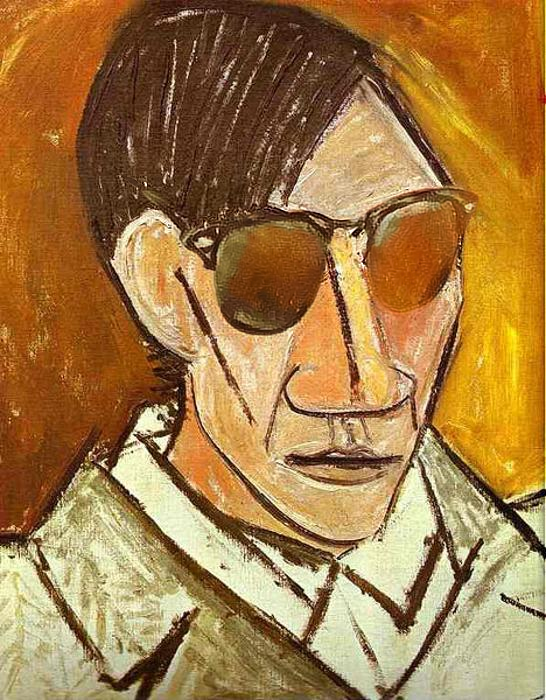

Here are some results from our algorithm (left to right: input, style, and our output):

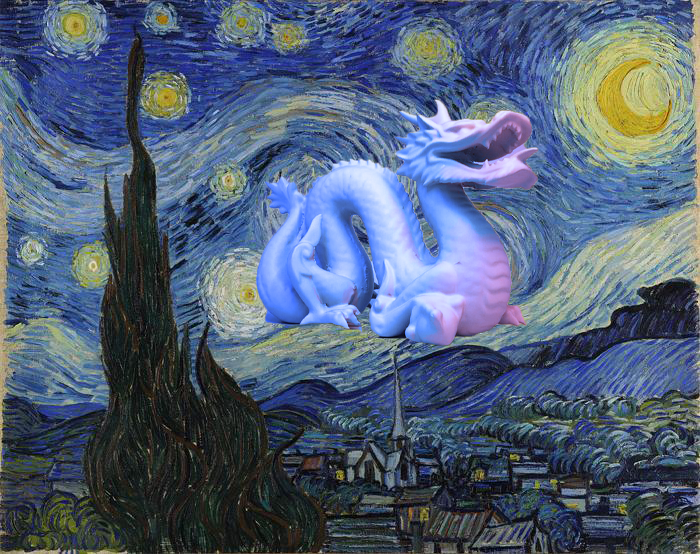

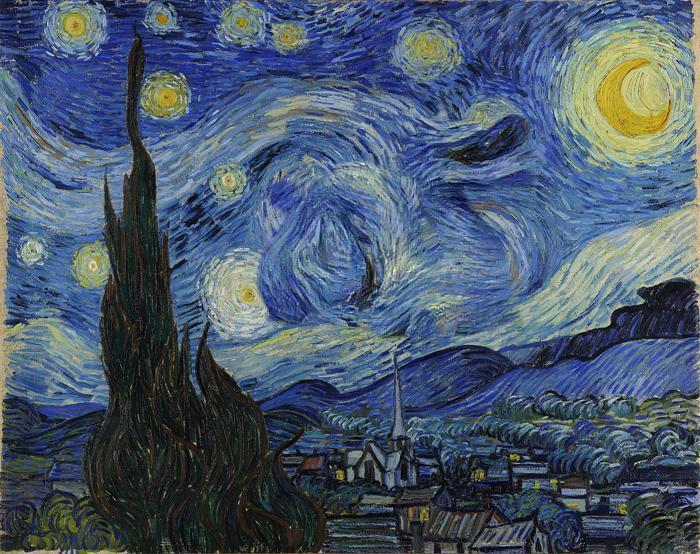

2018 GitHub deep-painterly-harmonization

Code and data for the paper Deep Painterly Harmonization

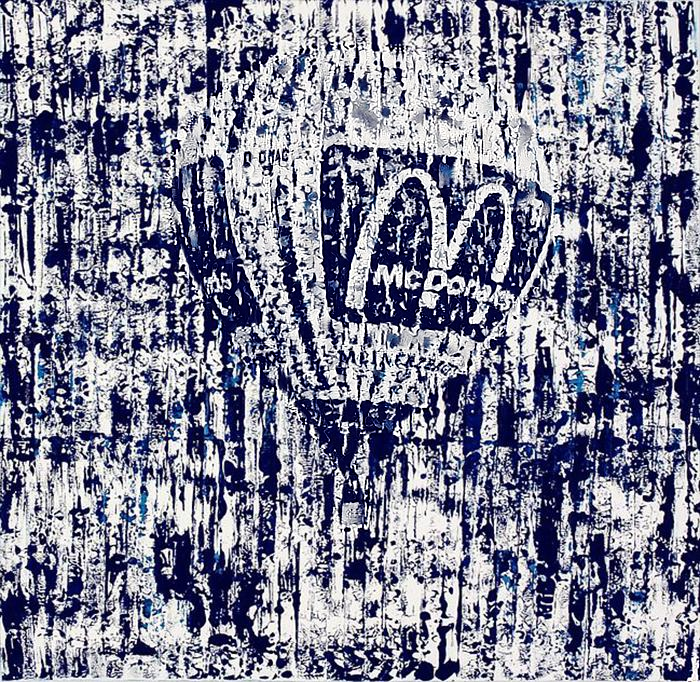

Here are some results from our algorithm (left to right: original painting, naive composite, and our output):

2018 GitHub stroke-controllable-fast-style-transfer

Code for the paper Stroke Controllable Fast Style Transfer with Adaptive Receptive Fields

Fast style transfer methods have recently been proposed to transfer a photograph to an artistic style in real time. This task involves controlling the stroke size in the stylized results, which remains an open challenge. In this paper, we present a stroke controllable style transfer network that can achieve continuous and spatial stroke size control. By analyzing the factors that influence the stroke size, we propose to explicitly account for the receptive field and the style image scales. We propose a StrokePyramid module to endow the network with adaptive receptive fields, and two training strategies to achieve faster convergence and augment new stroke sizes upon a trained model respectively. By combining the proposed runtime control strategies, our network can achieve continuous changes in stroke sizes and produce distinct stroke sizes in different spatial regions within the same output image.

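The abstract names the receptive field as one factor that influences stroke size. For a plain stack of convolution or pooling layers, the receptive field of one output unit follows a simple recurrence: each layer adds (kernel size - 1) times the current input-space spacing between units, and each stride multiplies that spacing. The helper below is a generic illustration of that recurrence, not the paper's StrokePyramid module; the function name and layer configurations are made up for the example.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride) pairs for a stack of conv/pool layers.
    Returns the receptive field, in input pixels, of one unit in the last layer."""
    rf, jump = 1, 1  # start from a single input pixel with unit spacing
    for k, s in layers:
        rf += (k - 1) * jump  # a k-wide kernel spans k-1 extra steps of the current spacing
        jump *= s             # stride multiplies the spacing between adjacent units
    return rf

print(receptive_field([(3, 1)] * 3))              # 7: three stride-1 3x3 convs
print(receptive_field([(3, 2), (3, 2), (3, 1)]))  # 15: strides enlarge the field faster
```

Downsampling grows the receptive field much faster than stacking stride-1 layers, which is one reason network depth and stride matter when the goal is to control how large a region each output pixel "sees".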

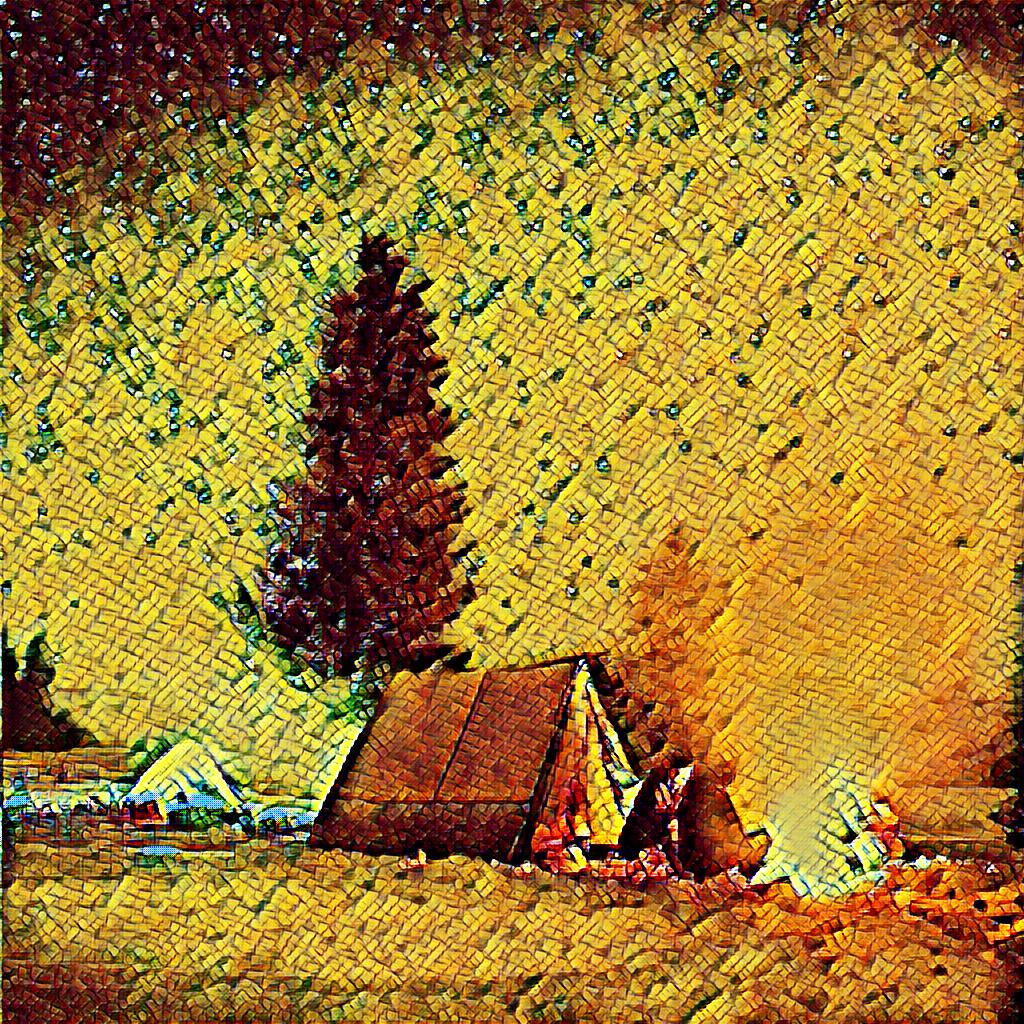

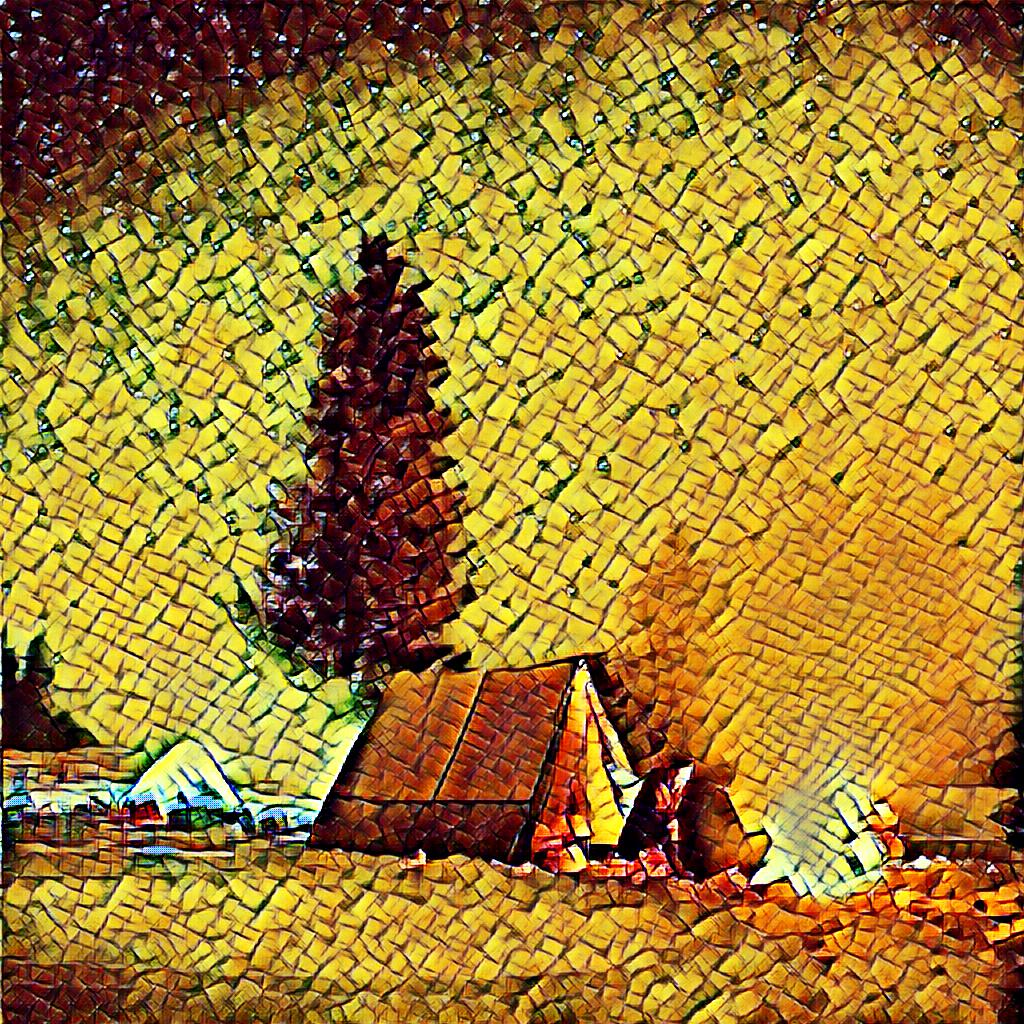

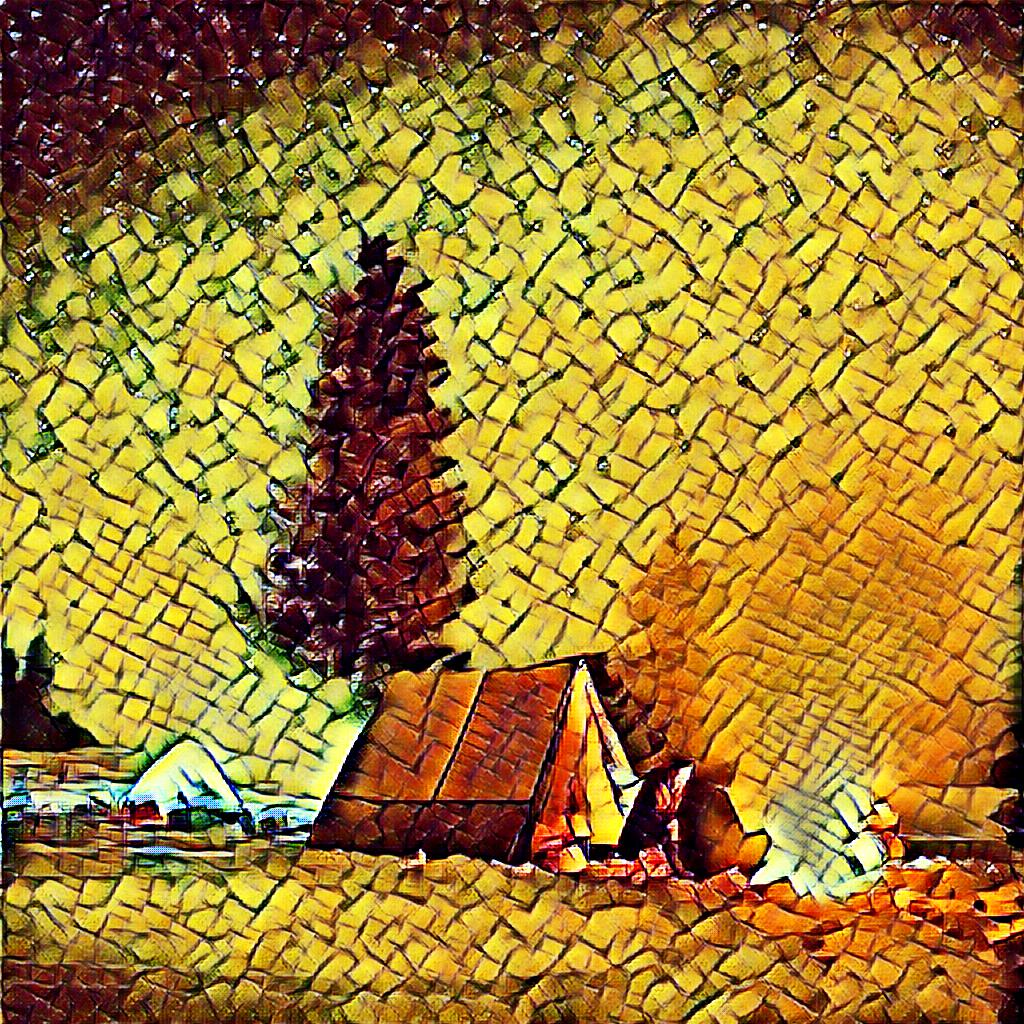

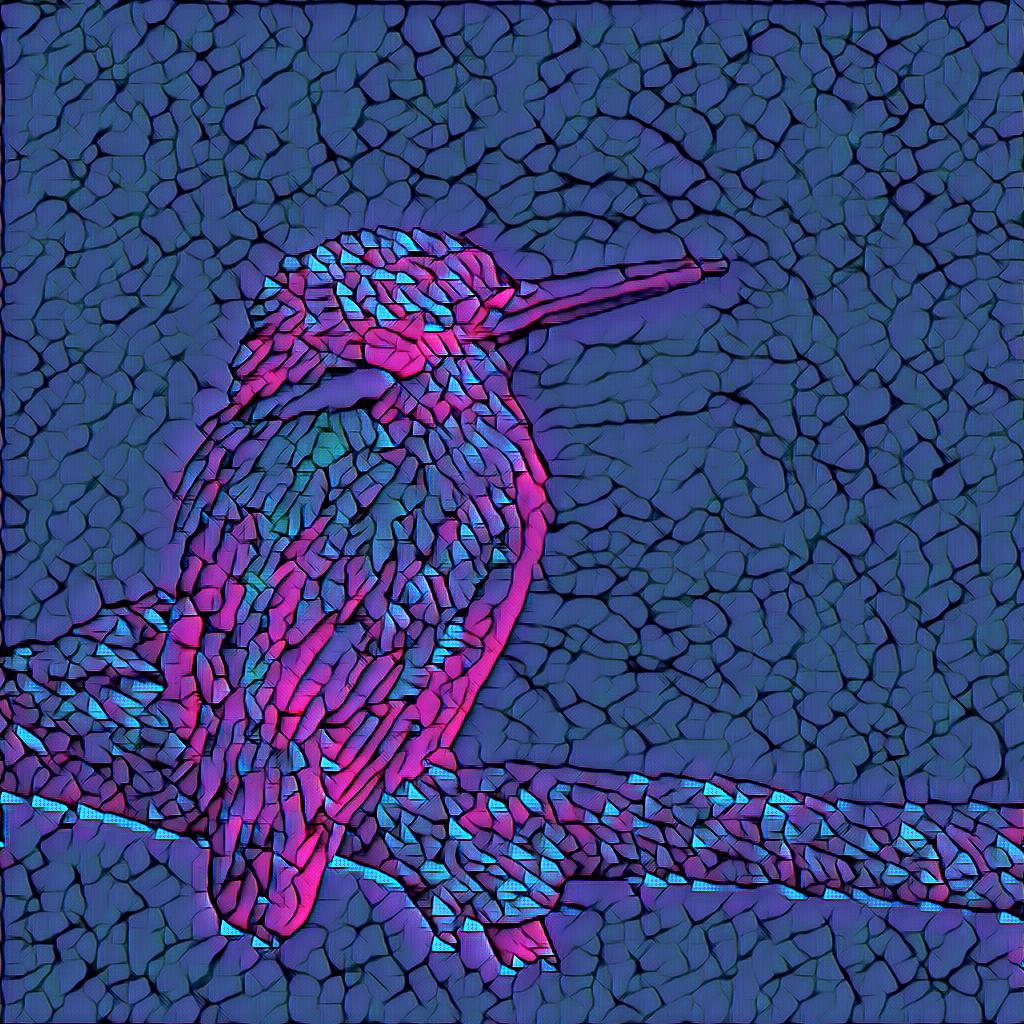

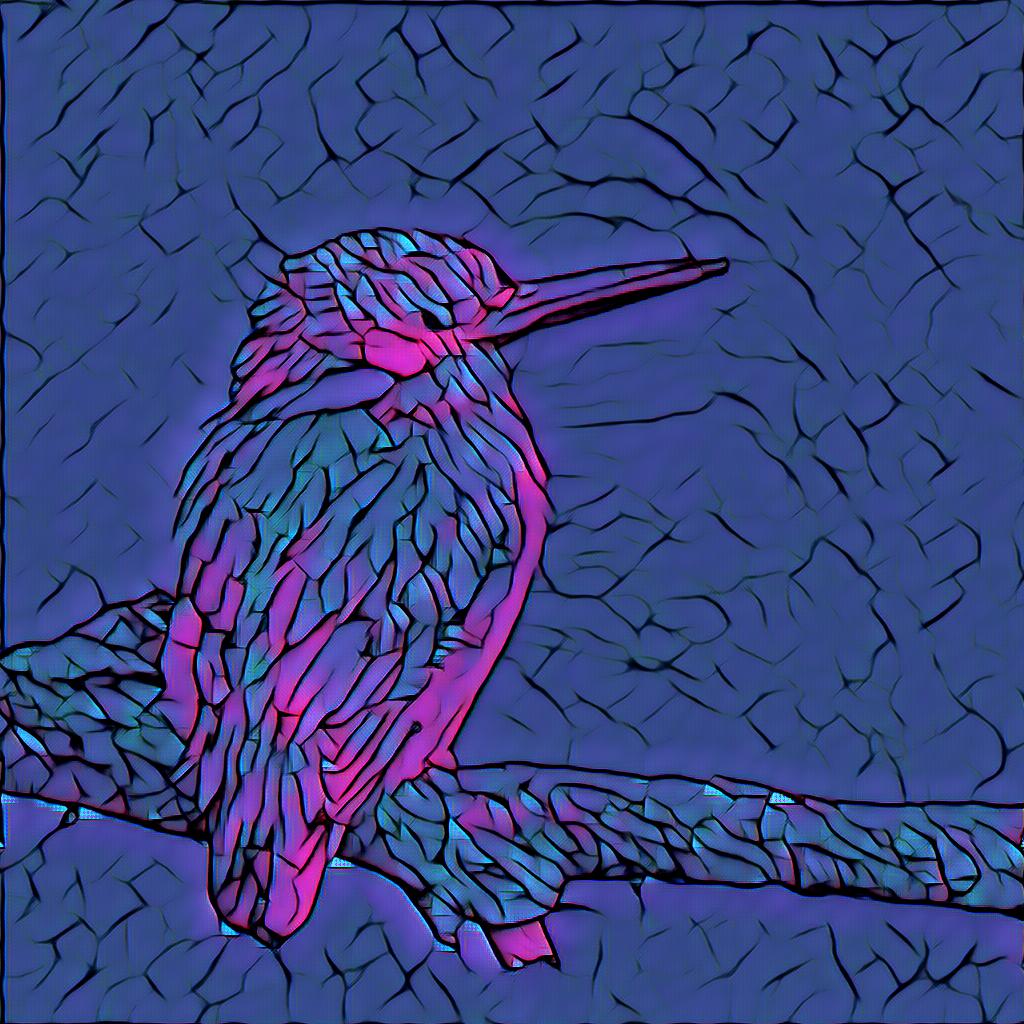

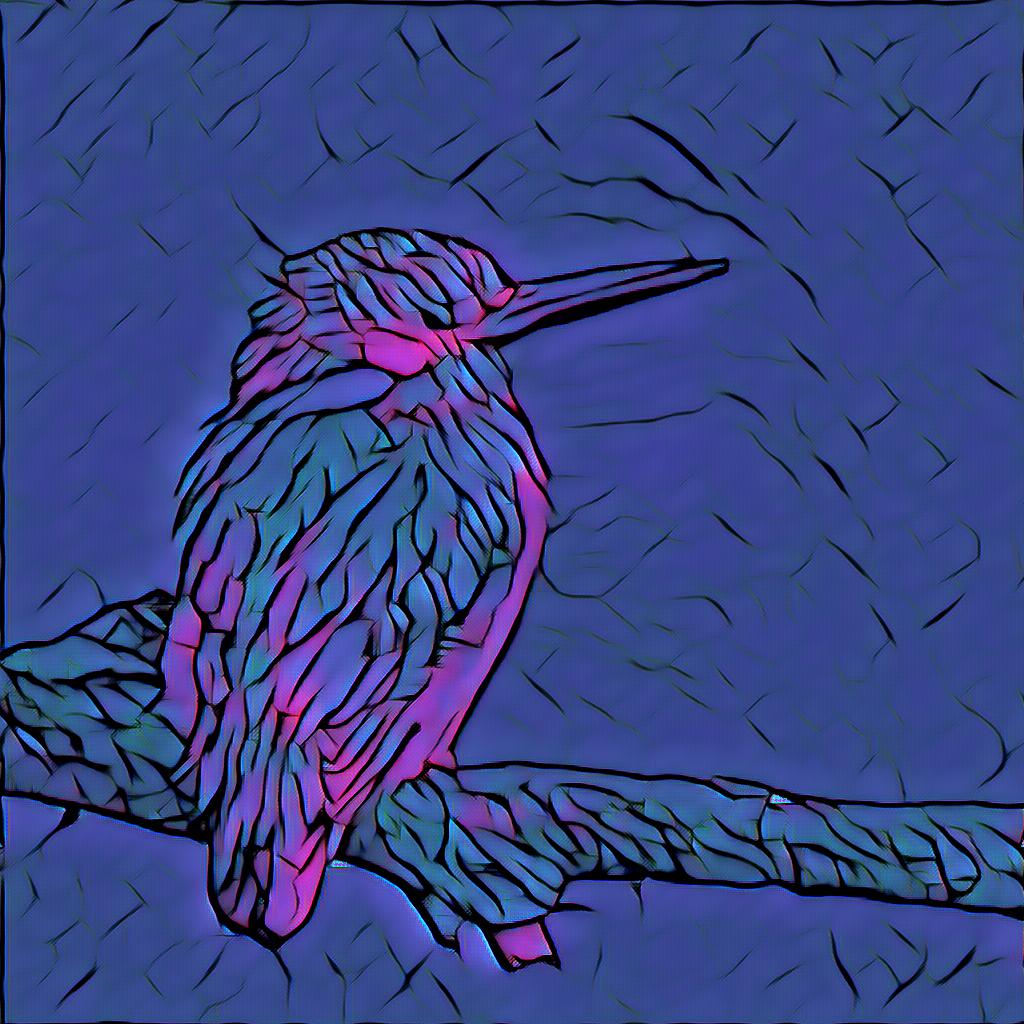

Examples

From left to right: content, style, and results with stroke sizes 256, 512, and 768.